The AI Infrastructure Gigacycle: A Primer for 2026

Note for paid subscribers: As we kick off the Diligence Stack, I felt we needed to publish some anchor reports to set the foundation we will build upon. This report is long, but each section needs the depth. Each section will also get its own deep dive in the coming months, and not all reports will be this long.

For free subscribers, our goal is still to provide value and insights in the free 600-800-word primer. On to the report.

The global semiconductor market is on track to reach $975 billion in 2027, within striking distance of the $1 trillion milestone originally anticipated for 2030. The only apparent limit on further upside is fundamental constraints in the supply chain. This is an unprecedented moment for the semiconductor industry, one in which countless conversations with executives have yielded essentially the same sentiment: “I’ve never seen anything like this in my 30-40-year career in this industry.”

In our view, the analytical framework for 2026, and even extending to 2027 (and longer), centers on five structural dynamics: the sustainability of the capex cycle, the shift from compute to energy as the binding constraint, the economics of inference versus training, the emergence of custom silicon at scale and its effect on merchant AI accelerators, and the physical bottlenecks in memory, storage, and advanced packaging that will gate deployment regardless of demand.

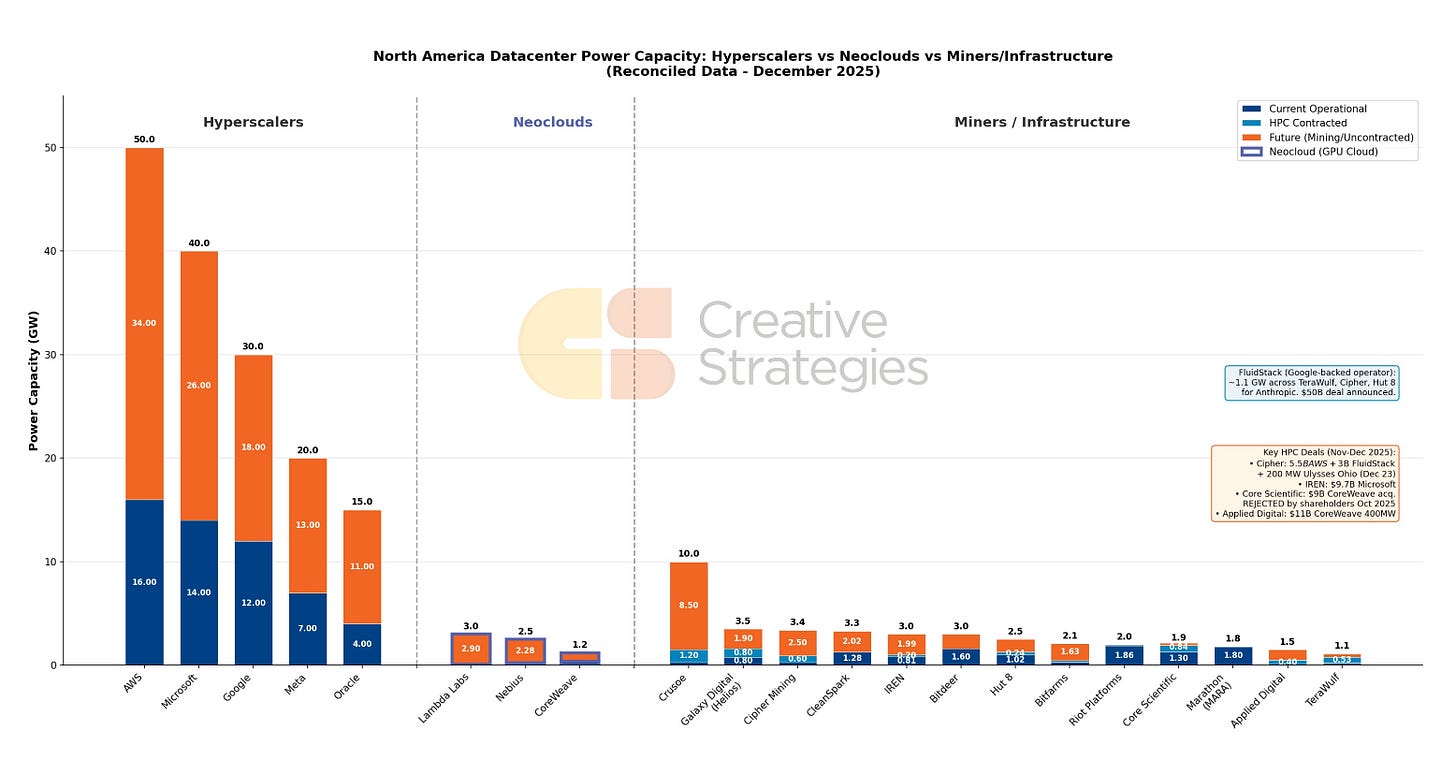

The most consequential shift is the migration of the binding constraint from compute to energy. For the past two years, GPU availability has dominated strategic planning across the ecosystem and has been a primary driver of greenfield, purpose-built AI data center construction. By many estimates, U.S. data center power demand will grow 3.4x by 2030, reaching ~604 TWh and accounting for 13.2% of national power consumption. Gas turbine orders are at a decade high, creating a two-to-three-year backlog. Transformers face multi-year delays, grid interconnection queues run two to three years, and many secured contracts may still never reach energization. As a result, the time-to-power debate has shifted to the viability of behind-the-meter solutions. Companies that secure reliable power gain structural advantages that chip allocation alone cannot offset. Many will try and many will fail, so tracking gigawatts (and power/utility industry dynamics as a whole) has become a central exercise for the AI infrastructure sector. *While I am not a power/utilities analyst, I have access to enough research and friendlies in the space that I have caught up very quickly, and this is a primary pillar we are tracking through the lens of its impact on AI infrastructure.
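The power figures above can be sanity-checked with simple arithmetic. The Python sketch below uses only the numbers quoted in this section; the function names are hypothetical helpers, and because the baseline year for the 3.4x multiple is not stated, the annual growth rate is computed for a few assumed horizons rather than asserted. The 604 TWh and 3.4x figures imply a starting point of roughly 178 TWh, and the 13.2% share implies total U.S. consumption of roughly 4,600 TWh in 2030.

```python
"""Back-of-envelope check on the U.S. data center power figures quoted above.

Inputs are the article's estimates; the helper functions are hypothetical.
"""

DC_DEMAND_2030_TWH = 604.0   # projected U.S. data center demand by 2030
GROWTH_MULTIPLE = 3.4        # growth multiple quoted for the same period
SHARE_OF_US_2030 = 0.132     # data center share of national consumption


def implied_baseline_twh(target_twh: float, multiple: float) -> float:
    """Demand at the (unstated) starting point implied by the growth multiple."""
    return target_twh / multiple


def implied_national_total_twh(dc_twh: float, share: float) -> float:
    """Total national consumption implied by the data center share."""
    return dc_twh / share


def implied_cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate that turns 1.0 into `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    baseline = implied_baseline_twh(DC_DEMAND_2030_TWH, GROWTH_MULTIPLE)
    total = implied_national_total_twh(DC_DEMAND_2030_TWH, SHARE_OF_US_2030)
    print(f"Implied baseline: {baseline:.0f} TWh")
    print(f"Implied U.S. total in 2030: {total:,.0f} TWh")
    # ASSUMPTION: the horizon is not given in the text; try a few candidate spans.
    for years in (5, 6, 7):
        print(f"CAGR over {years} years: {implied_cagr(GROWTH_MULTIPLE, years):.1%}")
```

A shorter assumed horizon implies a steeper annual ramp, which is why the baseline year matters when comparing power forecasts against each other.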

The inference transition is an equally important structural shift to watch. Training workloads favor maximum-performance accelerators (and GW-class data centers) with high memory bandwidth, rack-scale solutions, and leading performance per watt; this is NVIDIA’s and AMD’s core market. Inference workloads will create demand across a broader range of silicon, from data center chips to edge processors. Both inference and training are moving AI accelerators toward rack-scale design, which has major implications for the software industry and for competition. As inference costs decline, consumption grows faster than costs fall, a dynamic that expands total compute demand even as unit economics improve. We are still in the early cycles of AI adoption, and declining token costs are a key driver of accelerating adoption as a whole. While we have not yet reached the inference-only product stage for AI accelerators, we believe that time will come rapidly. As the market fragments into more specialized AI hardware, we expect different architectural decisions for dedicated inference accelerators (and different data center designs for inference-only workloads). When that happens, we believe some of the fundamentals of competition will change as well.
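The claim that consumption grows faster than costs fall is the Jevons Paradox dynamic, and a textbook constant-elasticity demand curve illustrates it. This is a minimal sketch, not the author’s model: the elasticity values and the 10x price cut are hypothetical, and real token demand is unlikely to follow a single constant elasticity. The point is the threshold: when demand elasticity exceeds 1, total spend on tokens rises as the price per token falls.

```python
"""Illustrative Jevons Paradox sketch for inference pricing.

ASSUMPTION: token demand follows a constant-elasticity curve Q = k * P**(-e).
The elasticities and the price cut below are hypothetical, not forecasts.
"""


def tokens_demanded(price: float, elasticity: float, k: float = 1.0) -> float:
    """Quantity of tokens demanded at a given price per token."""
    return k * price ** (-elasticity)


def total_spend(price: float, elasticity: float, k: float = 1.0) -> float:
    """Total spend = price per token * tokens demanded = k * P**(1 - e)."""
    return price * tokens_demanded(price, elasticity, k)


if __name__ == "__main__":
    old_price, new_price = 1.0, 0.1  # hypothetical 10x cut in cost per token
    for e in (0.8, 1.0, 1.5):
        volume_x = tokens_demanded(new_price, e) / tokens_demanded(old_price, e)
        spend_x = total_spend(new_price, e) / total_spend(old_price, e)
        print(f"elasticity={e}: token volume x{volume_x:.1f}, total spend x{spend_x:.2f}")
```

At an elasticity of 1.5, a 10x price cut raises token volume about 31.6x and total spend about 3.2x; at 0.8, total spend falls. Only the elastic case matches the dynamic described above, where cheaper inference expands total compute demand.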

Custom silicon is the structural competitive question for merchant GPU vendors. The ASIC market will grow over 50% in volume with ASPs rising 75-100%, driving TAM growth in excess of 100%. Google is deploying seventh-generation TPU superpods, and Amazon’s third-generation Trainium, also a rack-scale solution, arrives this year. The market is bifurcating: custom silicon for predictable internal workloads (and a few mega customers), merchant silicon for flexibility and external customers. The most telling metric we will watch is performance per dollar per watt across all competitive AI accelerators. Our forecast by accelerator, via our AI accelerator simulator model, is included in the full report for subscribers.
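The TAM arithmetic follows from revenue being volume times ASP, so the growth rates multiply rather than add. The Python sketch below uses the section’s ranges (over 50% volume growth, 75-100% ASP growth) as inputs. The `perf_per_dollar_per_watt` helper is a hypothetical normalization of the metric named above; the text does not define units, so treat performance, price, and power as whatever consistent units a given comparison uses.

```python
"""TAM multiplier and a simple performance-per-dollar-per-watt helper.

Growth inputs come from the text; the metric's normalization is an assumption.
"""


def tam_growth(volume_growth: float, asp_growth: float) -> float:
    """TAM growth when revenue = units * ASP: (1 + v) * (1 + p) - 1."""
    return (1.0 + volume_growth) * (1.0 + asp_growth) - 1.0


def perf_per_dollar_per_watt(performance: float, price_usd: float, power_w: float) -> float:
    """ASSUMPTION: metric = performance / (price * power); units are caller-defined."""
    if price_usd <= 0 or power_w <= 0:
        raise ValueError("price and power must be positive")
    return performance / (price_usd * power_w)


if __name__ == "__main__":
    # 50% volume growth is the floor quoted ("over 50%"), so these are lower bounds.
    for asp in (0.75, 1.00):
        print(f"volume +50%, ASP +{asp:.0%}: TAM +{tam_growth(0.50, asp):.1%}")
```

Even at the low end of the ASP range, 1.5 x 1.75 = 2.625, or roughly 163% TAM growth, consistent with the claim that TAM growth exceeds 100%.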

High-bandwidth memory remains sold out through 2026 (and possibly beyond). The storage industry faces similar dynamics, with customers already booking 2027 orders. In memory, we believe this cycle differs structurally from prior ones: cyclicality may not affect every category of memory demand.

TSMC’s advanced packaging capacity remains severely constrained despite a 60-70% expansion. Broad shifts in customer priorities require viewing TSMC through a different lens than the one we used in the mobile (monolithic SoC) era.

The main point for the semiconductor industry, and the surrounding supply chain, is: constraints exist as far as the eye can see. Understanding where bottlenecks are, how they shift competitive dynamics, and where those constraints open opportunities for competition and new players will be an ongoing exercise.

In the full analysis below, paid subscribers get:

The Funding Question — Why the cash-flow structure of this cycle fundamentally changes the failure mode compared to previous technology bubbles, and what that means for cycle duration

The Constraint Shift — How energy replacing compute as the binding constraint reshapes competitive positioning, deployment timelines, and geographic strategy

The Inference Transition — Where the Jevons Paradox dynamic is creating demand growth that exceeds capacity expansion, and implications for the semiconductor value chain

Physical AI — Why 2026 is the breakout year for robotics and autonomous systems, and the distinct silicon requirements for embodied AI

The Memory Bottleneck — How HBM4 supply constraints cap total accelerator deployments regardless of chip availability. Insights into why this memory cycle is different and bears similarities to logic.

TSMC N2 — The compound advantage of leading-edge process plus advanced packaging, and why it cannot be replicated

The ASIC Question — What hyperscaler custom silicon adoption means for market bifurcation and merchant GPU positioning

The Companies That Matter — A framework for tracking execution across hyperscalers, chip designers, memory suppliers, and foundries with specific variables to monitor

The full analysis continues below for paid subscribers.