NVIDIA CES 2026: The Vera Rubin Platform and the Economics of AI Infrastructure

Note: I attended both the CES 2026 Financial Analyst Q&A and the Industry Analyst Q&A, and also spent time with management. Below are the takeaways from those sessions and meetings, along with the items from NVIDIA’s CES 2026 announcements and management commentary that stood out and say the most about the competitive direction of the segment and of NVIDIA.

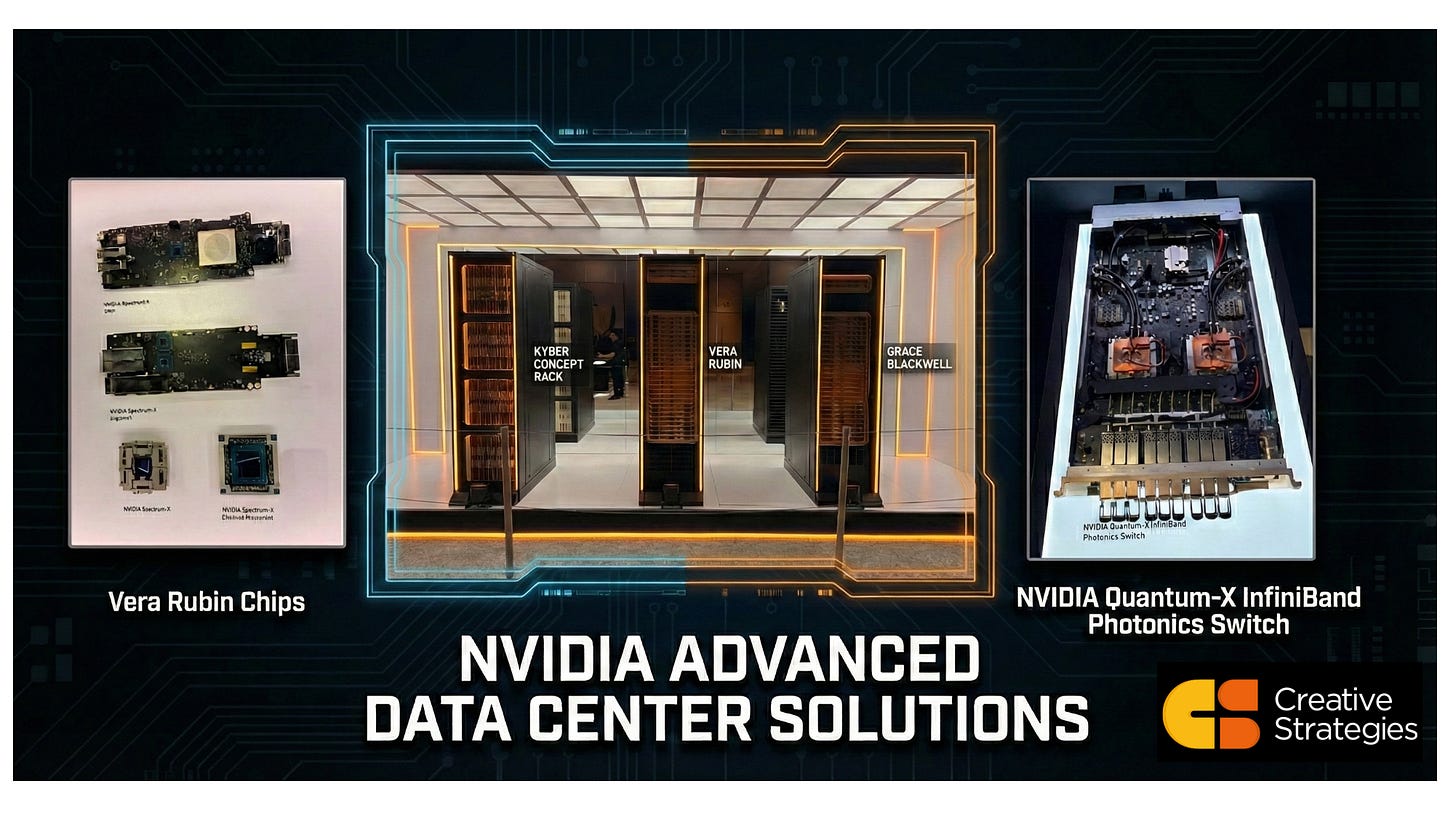

To the surprise of many, NVIDIA’s CES 2026 keynote officially launched the Vera Rubin platform, the company’s most comprehensive architectural refresh since Hopper. Launching at CES in January makes sense in the context of a nine-month timeline to availability, assuming no delays, which we do not currently expect. The Vera Rubin platform comprises six new chips engineered as an integrated system, a point Jensen went out of his way to make. This system-level co-design philosophy extends NVIDIA’s full-stack strategy to more core silicon and adjacencies, and now to the true rack-scale (scale out) level, with each chip optimized for specific functions within the AI factory workflow. Jensen emphasized that all six chips are back from the fab, partner validation is underway, and the ecosystem is preparing for H2 2026 availability. Also noteworthy: management emphasized that customer conversations for Rubin are already about 2027. More on that later.

Vera Rubin targets a scaling pressure that comes with sparse-routing MoE models: under expert parallelism, token routing introduces a bursty, often all-to-all communication phase that can become the binding constraint at rack scale. NVIDIA frames NVLink 6 accordingly, describing all-to-all connectivity across 72 GPUs with predictable latency as a “critical requirement” for MoE routing, collectives, and synchronization-heavy inference paths. Kimi K2 illustrates the mechanic in concrete terms, with 384 total experts and 8 experts active per token. Google has publicly described Gemini 1.5/2.5/3 as MoE-based, and GPT and Claude are likely MoE as well. NVIDIA designed Rubin so that communication does not become a tax on MoE and similar routing-heavy models. NVLink 6 delivers 3.6 TB/s per GPU and ~260 TB/s of rack-scale bandwidth in an all-to-all NVL72 fabric, with hardware acceleration for collective operations, so token routing, synchronization, and context movement can run at rack scale without starving the GPUs. The takeaway: Rubin is tuned for where frontier models are going (more routing, more coordination, and more time spent “thinking”), which makes the interconnect as decisive as the compute.
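To make the routing pressure concrete, here is a minimal sketch of the arithmetic, not NVIDIA’s or any model vendor’s implementation. It checks that 72 GPUs at 3.6 TB/s each gives roughly the quoted rack figure, and it simulates how many distinct GPUs a single token touches with 384 experts and 8 active per token. The round-robin expert placement and the uniform random router are assumptions made purely for illustration; real routers are learned and real placements vary.

```python
import random
from collections import Counter

GPUS_PER_RACK = 72               # NVL72, from the text
NVLINK6_TBPS_PER_GPU = 3.6       # per-GPU NVLink 6 bandwidth, from the text
TOTAL_EXPERTS = 384              # Kimi K2 figure quoted in the text
ACTIVE_EXPERTS_PER_TOKEN = 8     # Kimi K2 figure quoted in the text


def rack_bandwidth_tbps(gpus=GPUS_PER_RACK, per_gpu=NVLINK6_TBPS_PER_GPU):
    """Aggregate bandwidth if every GPU drives its full per-GPU rate."""
    return gpus * per_gpu


def expert_to_gpu(expert_id, gpus=GPUS_PER_RACK):
    """Hypothetical round-robin placement of experts onto GPUs."""
    return expert_id % gpus


def route_tokens(num_tokens, seed=0):
    """Route tokens with a uniform random router (an assumption).

    Returns (load, fanout): load counts how many tokens hit each GPU,
    fanout lists how many distinct GPUs each token had to reach.
    """
    rng = random.Random(seed)
    load = Counter()
    fanout = []
    for _ in range(num_tokens):
        experts = rng.sample(range(TOTAL_EXPERTS), ACTIVE_EXPERTS_PER_TOKEN)
        gpus = {expert_to_gpu(e) for e in experts}
        fanout.append(len(gpus))
        load.update(gpus)
    return load, fanout


if __name__ == "__main__":
    print(f"{rack_bandwidth_tbps():.1f} TB/s")  # 259.2 TB/s, i.e. the ~260 TB/s quoted
    load, fanout = route_tokens(10_000)
    print("mean GPUs touched per token:", sum(fanout) / len(fanout))
    print("busiest vs. quietest GPU:", max(load.values()), min(load.values()))
```

Under these assumptions almost every token fans out to several different GPUs, which is why the traffic pattern looks all-to-all rather than nearest-neighbor.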

The economic claims remain a fundamental position for NVIDIA (and competitors) and a key justification for the annual product cadence. Management positioned the platform around three vectors: 4x fewer GPUs required for equivalent large MoE training throughput versus Blackwell, 7x lower token cost for large MoE inference at equivalent latency, and a cascade of operational efficiencies—power smoothing, zero-downtime maintenance, assembly time compression—that affect total cost of ownership for anyone building AI factories. The main point Jensen emphasized here was that customers who are power-constrained will seek to get the most tokens per watt, since tokens = $, and Rubin is purpose-built for that metric.
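The “tokens = $” logic for a power-constrained site can be sketched in a few lines. This is an illustrative model only: the power budget, efficiency, and price values in the usage example are hypothetical placeholders, not NVIDIA or customer figures. Note that tokens per second per watt is the same unit as tokens per joule.

```python
def tokens_per_second(power_budget_w, tokens_per_joule):
    """Sustained token rate when the site is limited by power, not by GPUs."""
    return power_budget_w * tokens_per_joule


def revenue_per_hour(power_budget_w, tokens_per_joule, usd_per_million_tokens):
    """Hourly revenue for a fixed power budget (all inputs are hypothetical)."""
    tps = tokens_per_second(power_budget_w, tokens_per_joule)
    return tps * 3600 / 1e6 * usd_per_million_tokens


if __name__ == "__main__":
    site_power_w = 1_000_000          # hypothetical 1 MW of IT power
    price = 2.0                       # hypothetical USD per million tokens
    baseline = revenue_per_hour(site_power_w, 1.0, price)
    improved = revenue_per_hour(site_power_w, 2.0, price)  # 2x tokens per joule
    print(baseline, improved, improved / baseline)
```

With power fixed, revenue in this model scales linearly with tokens per watt, which is the reason a power-constrained buyer would optimize for that metric rather than for chip count.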

Jensen called out that four of the six new chips originated from NVIDIA’s Israel engineering team, and it was noteworthy that he went out of his way to say so. The point: the Mellanox acquisition continues to pay architectural dividends. NVIDIA’s networking business could ramp to $70B+ by the end of the decade, if not sooner. Key assets such as ConnectX-9 SuperNIC, NVLink Switch 6, Spectrum-X6 with CPO, and BlueField-4 (I’m a huge fan of DPUs!) give NVIDIA the networking capabilities to compete at the now-required rack-scale level. The full-stack integration from silicon through software only deepens NVIDIA’s technical moats.

The most strategically significant announcement may be the one receiving the least attention: the Inference Context Memory Storage Platform. NVIDIA is creating an entirely new infrastructure tier purpose-built for KV cache storage in agentic AI workloads. The framing from management was explicit: “The bottleneck is shifting from compute to context management.” In other words, the story is long-lived memory and storage.

This matters because inference is evolving. Simple one-shot chatbot responses are giving way to long-running agentic processes and thinking models, with multi-step reasoning and context windows extending to millions of tokens. The KV cache becomes a critical data type: too large for GPU HBM, too latency-sensitive for traditional network storage. NVIDIA’s solution, built on BlueField-4 and Spectrum-X, delivers claimed improvements of 5x tokens per second, 5x performance per TCO dollar, and 5x power efficiency versus traditional network storage for inference context. The design of superpod/rack cluster systems is something to watch.
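A back-of-the-envelope estimate shows why the KV cache outgrows HBM at long context. The sketch below uses the standard sizing for attention with grouped key/value heads (keys plus values, per layer, per token). The model shape (64 layers, 8 KV heads, head dimension 128, 2-byte elements) and the HBM capacity passed to `fits_in_hbm` are hypothetical, chosen only for illustration. Architectures that compress the cache will come out smaller.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_elem=2, batch=1):
    """KV cache size: 2 tensors (K and V) per layer, per token, per KV head."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem * batch


def fits_in_hbm(cache_bytes, hbm_bytes, reserved_fraction=0.5):
    """True if the cache fits in the HBM left after weights and activations.

    reserved_fraction is a hypothetical share of HBM taken by everything else.
    """
    return cache_bytes <= hbm_bytes * (1 - reserved_fraction)


if __name__ == "__main__":
    # Hypothetical model shape, not any specific production model.
    shape = dict(num_layers=64, num_kv_heads=8, head_dim=128)
    per_token = kv_cache_bytes(seq_len=1, **shape)
    print(per_token)  # 262144 bytes, i.e. 256 KiB per token

    for tokens in (8_000, 128_000, 1_000_000):
        size = kv_cache_bytes(seq_len=tokens, **shape)
        # 200e9 is a placeholder HBM capacity in bytes, not a product spec.
        print(tokens, f"{size / 1e9:.1f} GB", fits_in_hbm(size, 200e9))
```

Under these assumptions a single million-token session needs on the order of a few hundred gigabytes for context alone, before any batching, which is the gap a dedicated context-memory tier is meant to fill.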

Two points from management’s CES analyst discussions are worth highlighting because they signal where NVIDIA intends to extend its economic capture beyond accelerators. First, Jensen framed storage as a first-order constraint for agentic/long-context inference and highlighted an ecosystem push with incumbent storage vendors enabled by the BlueField-4 roadmap and NVIDIA’s “context memory” storage architecture. Second, he argued that CPU volume (NVIDIA’s CPU volume) will expand materially as rack-scale systems proliferate across compute, networking, and storage layers—an assertion that maps cleanly to the design choices in Vera Rubin NVL72, which integrates 36 Vera CPUs alongside 72 Rubin GPUs and 18 BlueField-4 DPUs. Technically, the Vera CPU is less about “replacing” x86 and more about maximizing GPU goodput (Goodput = delivered, end-to-end useful work): 88 custom Olympus cores sit behind a large, high-bandwidth memory subsystem (up to 1.5TB LPDDR5X / ~1.2 TB/s) and a coherent CPU-GPU fabric (NVLink-C2C at 1.8 TB/s), creating an additional tier for KV-cache management/offload, data staging, and control-plane orchestration so context growth doesn’t translate into idle accelerators. The TAM expansion Huang outlined is best modeled as a stack expansion—GPUs remain the main compute engine, but CPUs, DPUs, and AI-native storage increasingly define system performance and therefore become monetizable layers where NVIDIA can capture a larger share of total rack economics.
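The rack composition quoted above implies some simple ratios, sketched here. The counts and per-CPU figures come from the text; treating the quoted bandwidths as fully usable one-way rates is an idealizing assumption, so the offload time is a lower bound rather than a measured number.

```python
GPUS, CPUS, DPUS = 72, 36, 18     # Vera Rubin NVL72 counts from the text
CPU_MEM_TB_MAX = 1.5              # "up to 1.5TB LPDDR5X" per Vera CPU
CPU_MEM_BW_TBPS = 1.2             # ~1.2 TB/s memory bandwidth per CPU
C2C_TBPS = 1.8                    # NVLink-C2C CPU-GPU link


def rack_summary():
    """Ratios and the upper bound on CPU-attached memory across the rack."""
    return {
        "gpus_per_cpu": GPUS / CPUS,
        "gpus_per_dpu": GPUS / DPUS,
        "cpu_memory_tb_max": CPUS * CPU_MEM_TB_MAX,
    }


def min_offload_seconds(size_tb, mem_bw=CPU_MEM_BW_TBPS, link_bw=C2C_TBPS):
    """Idealized lower bound: the slower of memory and link sets the pace."""
    return size_tb / min(mem_bw, link_bw)


if __name__ == "__main__":
    print(rack_summary())
    # Time to stream 0.6 TB of context out of one CPU's memory, best case.
    print(min_offload_seconds(0.6))
```

On these figures each Vera CPU fronts two Rubin GPUs, and the CPU-attached memory adds up to tens of terabytes per rack, which is what makes it plausible as a KV-cache offload tier.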

The Q&A session with analysts reemphasized Jensen’s market sizing framework: $10 trillion of global IT infrastructure requiring “re-modernization from classical to AI,” with NVIDIA currently capturing “a few hundred billion dollars.” Add the $100 trillion labor market as AI transitions from tool to labor augmentation (robotics, autonomous vehicles, software agents), and the opportunity becomes multi-decade and foundational, more like a buildout than a cycle. We agree: the stacking of AI cycles from datacenter to autonomy with physical AI is an underappreciated driver of the scale of growth that is coming.

Competitive positioning remains confident but not dismissive. When asked about Huawei and Chinese competition (full deep dive on Chinese competition coming soon), Jensen redirected to NVIDIA’s unique full-stack integration: “We’re the only company in the world that builds literally everything from the CPU all the way to now storage.” (He clarified in a follow-up comment that NVIDIA doesn’t build storage; rather, storage is designed into its solution.) The closing message to competitors: “I look forward to your competition. You’re going to have to work hard.”

As we evaluate AI infrastructure exposure, Vera Rubin clarifies NVIDIA’s strategic trajectory: deeper vertical integration, expanding TAM through new infrastructure tiers, and architectural advantages that compound with each generation. NVIDIA’s technology leadership appears durable through the Rubin generation, although we look forward to analyzing the competition’s rack-scale approaches as well.

What Subscribers Get in the Full Analysis

The complete Vera Rubin analysis available to Diligence Stack subscribers includes:

Platform Architecture

6 new chips and the significance of a fresh system architecture design

Vera CPU as the “sleeper”—how NVIDIA anticipated context scaling before the market

The rack-scale shift: why system integration beats point GPU benchmarks

Performance Economics

The denominator game: how NVIDIA shifts TCO comparison from compute to full system

Power smoothing math: 25% stranded capacity elimination at hyperscale. This has an impact on data center power as well.

Why CPO networking is an architectural transition, not an incremental improvement

Rack-Scale Economics

Abstraction shift from GPU to rack-as-processor and switching cost implications

Goodput over throughput: why serviceability metrics matter more than specs

Assembly compression (2hr → 5min) as supply chain velocity multiplier

Inference Context Memory

Category creation: why this is a flash-for-databases moment for AI inference

TAM expansion without capex: the asset-light partnership model

The CPU proliferation signal Jensen buried in the Q&A