Memory's $200B Inflection

Anatomy of a structural shift and why the memory industry may never be the same

The memory semiconductor industry went from roughly $85–90 billion in revenue at its 2023 trough to a trajectory that reaches $800–850 billion by 2027. That is roughly a 9× increase in four years. No segment of the semiconductor industry has ever grown this fast at this scale. At the forecast level of approximately $550–570 billion in 2026, memory alone would represent roughly 55–58% of the total global semiconductor market, a share that sat at 25–30% for the entirety of modern chip history.

The numbers behind this cycle are unlike anything the industry has seen. DRAM pricing is on track to increase roughly 275–300% from 2025 through 2027, more than tripling the ~90% rise during the legendary 2017–18 super cycle, and it is happening at roughly three times the revenue base. Conventional DRAM ASPs are approaching $1.20–1.30 per gigabit, which is converging with what HBM3E 12-Hi commands today. The premium for the most advanced memory in the world is collapsing from the bottom up, because conventional DDR5 has become so scarce that its price is rising to meet HBM. Meanwhile, mobile NAND demand is expected to be flat year over year in 2026, which is the first time that has happened in the history of the NAND industry. Smartphones are being de-specced, and PC shipments are falling because OEMs simply cannot absorb the cost of memory.

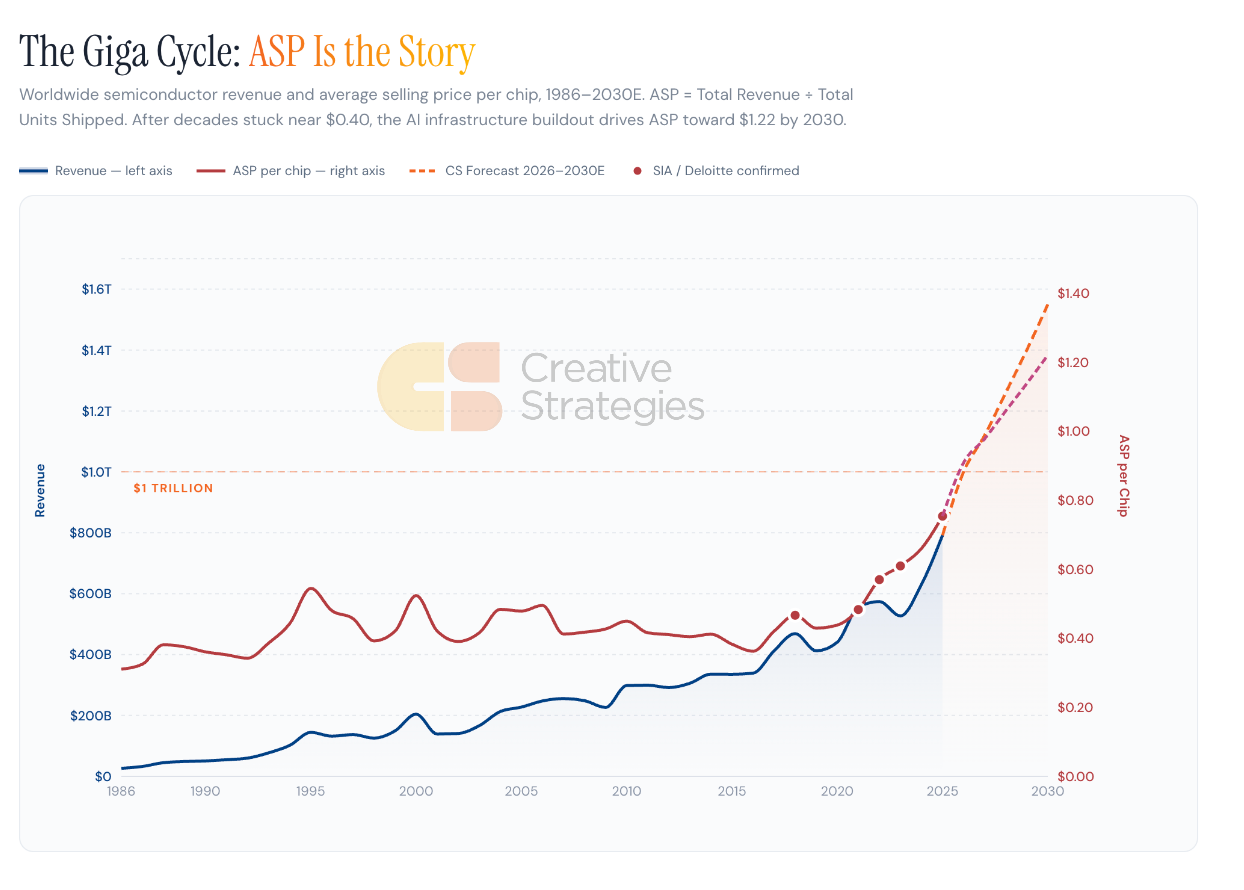

Memory is the primary driving force pushing the semiconductor industry past the long-awaited trillion-dollar revenue mark years in advance of the 2030 target. The industry will add roughly $208 billion in a single year from 2025 to 2026, more than the entire global semiconductor market generated annually as recently as 2003. What makes this cycle structurally different from every prior expansion is the source of that growth. From 1986 to 2020, the average selling price of a semiconductor oscillated in a $0.31–$0.44 band for 34 consecutive years. It has since doubled in just five years, reaching an estimated $0.91 in 2026 and tracking toward $1.22 by 2030. Unit volumes are projected to grow at roughly 4% annually through the end of the decade, yet revenue is compounding at 14%. The gap is entirely ASP. This is the first sustained period in industry history where pricing power, not volume, is the primary engine of value creation, and memory, particularly HBM and advanced DRAM, sits at the center of that shift.
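The unit-versus-revenue gap above can be decomposed with simple arithmetic. The sketch below assumes revenue equals units times ASP and that both growth rates compound annually over the same period; the function name is ours, for illustration only.

```python
def implied_asp_growth(revenue_growth: float, unit_growth: float) -> float:
    """Annual ASP growth implied by revenue and unit growth.

    Assumes revenue = units * ASP, so (1 + r) = (1 + u) * (1 + p).
    """
    return (1 + revenue_growth) / (1 + unit_growth) - 1


# Figures from the text: ~14% revenue growth on ~4% unit growth.
asp_growth = implied_asp_growth(revenue_growth=0.14, unit_growth=0.04)
print(f"Implied annual ASP growth: {asp_growth:.1%}")
```

Under those assumptions, pricing would need to compound at a bit under 10% a year to bridge 4% unit growth and 14% revenue growth.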

The AI demand engine is the single driver of these dynamics. Our analysis shows that if text-only AI inference were to scale unconstrained, the addressable memory demand from that single application category would approach 30–35% of 2026 global DRAM supply and could theoretically exceed 90% of NAND supply (see Assumptions appendix for how we bound this with adoption and capacity assumptions). Those are theoretical ceilings, not realizable consumption, but they illustrate the magnitude of the demand shock. The estimate covers text only: it does not include image, video, or multimodal workloads, nor the emerging agentic AI systems that require persistent context, long-term memory, and KV cache storage that scales linearly with users and conversation length. A typical AI server uses roughly eight times more memory than a traditional server, and AI server memory spend is on track to go from $35–40 billion in 2025 to $175–190 billion by 2027, roughly a five-fold increase in two years.
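To make the linear scaling of KV cache concrete, here is a minimal sketch using the standard transformer sizing: one key and one value vector per token, per layer, per KV head. The model shape (32 layers, 8 KV heads, head dimension 128, 2-byte values) is a hypothetical chosen for round numbers, not a figure from our analysis, and the sketch ignores optimizations such as cache quantization, paging, or sliding-window attention.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Bytes of KV cache for one conversation holding `tokens` tokens."""
    # Factor of 2: each layer stores one key and one value vector per token.
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


def fleet_kv_cache_gib(users: int, tokens_per_user: int, **model_shape: int) -> float:
    """Total KV cache in GiB if every user holds one live conversation."""
    return users * kv_cache_bytes(tokens_per_user, **model_shape) / 2**30


# Hypothetical model shape, chosen only for illustration.
MODEL = dict(layers=32, kv_heads=8, head_dim=128)

base = fleet_kv_cache_gib(users=1_000, tokens_per_user=8_192, **MODEL)
more_users = fleet_kv_cache_gib(users=2_000, tokens_per_user=8_192, **MODEL)
longer_chats = fleet_kv_cache_gib(users=1_000, tokens_per_user=16_384, **MODEL)

print(f"baseline:            {base:,.0f} GiB")
print(f"2x users:            {more_users:,.0f} GiB")
print(f"2x conversation len: {longer_chats:,.0f} GiB")
```

Doubling either the user count or the conversation length doubles the footprint, which is why persistent-context workloads add memory demand on top of the text-only ceiling.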

On the supply side, the constraints are physical (clean room space) and cannot be resolved quickly. New fabs from Samsung and SK Hynix will not ramp before the second half of 2027. Micron has announced a $200 billion long-term U.S. investment plan spanning Idaho, New York, and Virginia, but the first new fab (Idaho) does not begin DRAM production until mid-2027, and the New York fabs broke ground only in January 2026, leaving them years from meaningful output. Micron has also committed $9.6 billion to a new facility in Hiroshima targeting AI memory shipments by 2028. These are massive commitments, but they underscore rather than relieve the near-term constraint: even the most aggressive investor in the industry cannot add meaningful new supply before 2027–2028 at the earliest. Total DRAM wafer starts are growing roughly 6–8% year over year exiting 2026. Every wafer allocated to HBM is a wafer that does not produce conventional DDR5, creating a crowding-out effect that pushes conventional pricing even higher. DRAM inventory at major producers has fallen to roughly 3–4 weeks, well below normal levels. Some customers are already requesting memory allocation for 2028. We are in February 2026, and the industry is negotiating supply for a year that is two years away.

The financial impact is already reshaping the economics of computing. Memory now accounts for 65–70% of a standard data center server’s bill of materials, up from roughly 45–48% a year ago. A server that carried around $6,000–6,500 of memory last year now carries ~$15,500–16,500. For NVIDIA’s next-generation VR200 racks, per-rack memory costs are estimated to approach roughly 15–18% of total rack cost. Memory is adding roughly $100 billion per year to hyperscale capital expenditure budgets. On the consumer side, memory (DRAM and NAND combined) now represents 23–25% of an iPhone Pro’s component cost and 40–42% of a lower-end smartphone’s cost. The memory tax is real, it is large, and it is being paid across every layer of the technology stack.
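The server figures above can be cross-checked with a few lines of arithmetic. This sketch takes the midpoints of the quoted ranges and assumes, purely for illustration, that the non-memory portion of the bill of materials stayed flat year over year; the helper names are ours.

```python
def other_cost_from_share(memory_cost: float, memory_share: float) -> float:
    """Non-memory BOM cost implied by a memory cost and its share of the total."""
    return memory_cost * (1 - memory_share) / memory_share


def memory_share(memory_cost: float, other_cost: float) -> float:
    """Memory's share of the total bill of materials."""
    return memory_cost / (memory_cost + other_cost)


# Midpoints of the ranges quoted in the text.
old_memory = 6_250    # USD, midpoint of $6,000-6,500
old_share = 0.465     # midpoint of 45-48%
new_memory = 16_000   # USD, midpoint of $15,500-16,500

# Assumption: non-memory cost is unchanged from last year.
other = other_cost_from_share(old_memory, old_share)
new_share = memory_share(new_memory, other)

print(f"Implied non-memory BOM: ${other:,.0f}")
print(f"Implied memory share now: {new_share:.0%}")
```

With these inputs the implied share lands inside the 65–70% range, so the per-server dollar figures and the share figures are mutually consistent under the flat non-memory-cost assumption.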

For the three major producers, margins are at or approaching all-time highs and still climbing (the earnings-call phrase of the year is “margin expansion”). SK Hynix is running conventional DRAM operating margins estimated in the high-70% range, the highest in the company’s history. Samsung is in the low 70s. SK Hynix’s NAND operating margin in the mid-40% range would also be a record. By our assessment, memory industry profits are not expected to peak before Q4 2027. Three preconditions for a cycle peak (trough inventory, peak year-over-year pricing, and stock price inflection) remain unmet as of February 2026. The cycle peak, in our view, is still distant.

Four stats from our analysis that capture the magnitude of what is happening:

Memory revenue in 2026 will roughly equal the entire global semiconductor industry in 2022. One sub-segment of chips now matches what all chips combined — logic, analog, memory, everything — generated just four years ago.

The server has become a memory appliance. Memory’s share of a standard server’s bill of materials went from roughly 30% to 65–70% in under two years. The compute portion has gone from majority to minority. That structural inversion has never happened before.

Every HBM wafer kills roughly 3x its equivalent in conventional DRAM. HBM uses ~3x the silicon area per gigabyte. So the ~100–120 KWPM (thousand wafers per month) of incremental front-end allocation moving to HBM effectively removes 300–350 KWPM equivalent of conventional output. That’s the hidden mechanism driving DDR5 pricing into HBM territory.

The “memory tax” on hyperscalers exceeds the GDP of roughly 130 countries. At $140–160B in BOM-level memory cost across hyperscale servers, the annual memory bill exceeds the entire economic output of most nations.

Subscribe for the Full Report

The full report goes deep on the numbers behind this cycle with proprietary estimates, competitive frameworks, and the data we use to build our convictions. Subscribers receive:

▸ Detailed DRAM supply/demand modeling with pricing forecasts through 2027, including conventional DRAM ASP trajectories and the convergence with HBM pricing

▸ HBM competitive landscape breakdown covering market share, revenue, capacity, customer concentration, and the technology roadmap from HBM3E through HBM4

▸ Proprietary operating margin estimates for Micron derived through triangulation of peer data, HBM capacity ratios, and historical margin relationships

▸ NAND market analysis, including the impact of NVIDIA’s ICMSP platform, enterprise SSD demand, and the 29 exabyte incremental demand opportunity

▸ Competitive dynamics for Samsung, SK Hynix, and Micron with company-level revenue, margin, and capacity analysis

▸ BOM cost impact analysis quantifying how memory pricing is reshaping server economics, hyperscale capex, and consumer device pricing

▸ Industry revenue outlook tables with company-level HBM and DRAM revenue forecasts through 2027

▸ Risk assessment covering demand destruction thresholds, greenfield capacity timing, China’s CXMT, and the ASIC vs. GPU demand mix